Feature | Can you hear that diagram?

What does a diagram sound like? What does the shape of a sound feel like? At first sight, listening to diagrams and feeling sounds might sound like nonsense, but for people who are visually impaired it is a practical issue. Even if you can’t see words, you can still listen to them, after all. Spoken books were originally intended for partially sighted people, before we all realised how useful they were. Screen readers similarly read out the words on a computer screen, making the web and other programs accessible. Blind people can also use touch to read, which is essentially what Braille is: letters replaced with raised patterns you can feel.

The written world is full of more than just words though. There are tables and diagrams, pictures and charts. How does a visually impaired person deal with them? Is there a way to allow them to work with others creating or manipulating diagrams even when each person is using a different sense?

That’s what we have been exploring at Queen Mary University of London in partnership with the Royal National Institute for the Blind and the British Computer Association of the Blind. At the core of our work is the investigation of what we call cross-modal collaboration and how to design interactive technology to support it. This involves coming up with ways to transform visual representations like diagrams so they can be accessed using a sense other than vision – i.e. a different modality – as well as the design and implementation of systems to support people when working together using these alternative modalities.

Cross-modality

Our brains receive and combine information from different perceptual modalities to make sense of our environment. For instance, when watching and hearing someone speak we are combining signals from two different modalities, vision and audition. The process of coordinating information received through multiple perceptual modalities is fundamental and is known as cross-modal interaction. Cross-modality is therefore particularly relevant to individuals with perceptual impairments because they rely on sensory substitution to interpret information.

In the design of interactive systems, the phrase cross-modal interaction has also been used to refer to situations where individuals interact with each other while accessing the same shared space or objects through different sets of modalities. Current technological developments mean that it is increasingly feasible to support cross-modal input and output in a range of devices and environments, yet there are no practical examples of such systems. For example, Apple’s iPhone provides touch, visual, and speech interaction, but if collaborators cannot see the shared screen there is currently no coherent way for them to collaborate using differing sets of modalities beyond a phone conversation.

Cross-modal diagram editor

In our work, we focused on making diagrams accessible to visually impaired individuals, particularly to those who come across diagrams in their everyday activities. Our solution is a diagram editor with a difference. It allows people to edit ‘node-and-link’ diagrams like a flowchart or a mind map. The cross-modal editor converts the graphical part of a diagram, such as shapes and positions, into sounds you can listen to and textured surfaces you can feel. It allows people to work together exploring and editing a variety of diagrams including circuit diagrams, tube maps, organisation charts and software engineering diagrams. Each person, whether fully sighted or not, ‘views’ the diagram in the way that works for them.

The tool combines speech and non-speech sounds to display a diagram. For example, when the label of a node is spoken, it is accompanied by a bubble-bursting sound if it’s a circle, and a wooden sound if it’s a square. These sounds are called earcons and they are designed to be the auditory equivalent of an icon. The labels of highlighted nodes are spoken with a higher-pitched voice to show that they are highlighted. Different types of links are also displayed using different earcons to match their line style. For example, the sound of a straight line is smoother than that of a dashed line. The idea for auditory arrows actually came from listening to one being drawn on a chalkboard. They are displayed using a short and a long sound, where the short sound represents the arrow head and the long sound represents its tail. Changing the order in which they are presented changes the direction of the arrow: either pointing towards or away from the node.
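To make the mapping from shapes to sounds concrete, here is a minimal sketch in Python. It is not the editor's actual code: the earcon names, the `AudioEvent` type and both functions are hypothetical, and the choice that "head first" means "pointing towards the node" is an assumption, since the article only says that the order encodes the direction.

```python
from dataclasses import dataclass

# Hypothetical earcon names, loosely based on the sounds described above.
NODE_EARCONS = {"circle": "bubble_burst", "square": "wooden_knock"}


@dataclass
class AudioEvent:
    kind: str               # "earcon" (non-speech) or "speech"
    value: str              # earcon name or the text to speak
    pitch: str = "normal"   # "high" marks a highlighted node


def display_node(label, shape, highlighted=False):
    """Return the audio events for one node: its shape earcon plus its label."""
    pitch = "high" if highlighted else "normal"
    return [
        AudioEvent("earcon", NODE_EARCONS[shape]),
        AudioEvent("speech", label, pitch),
    ]


def display_arrow(points_towards_node):
    """Return the two-sound sequence for an arrow.

    Short sound = arrow head, long sound = tail. Assumption: hearing the
    head first means the arrow points towards the current node.
    """
    head = AudioEvent("earcon", "short")
    tail = AudioEvent("earcon", "long")
    return [head, tail] if points_towards_node else [tail, head]
```

A real system would pass these events to a speech synthesiser and a sound player; the sketch only shows how shape, highlighting and direction could each be carried by a separate property of the sound.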

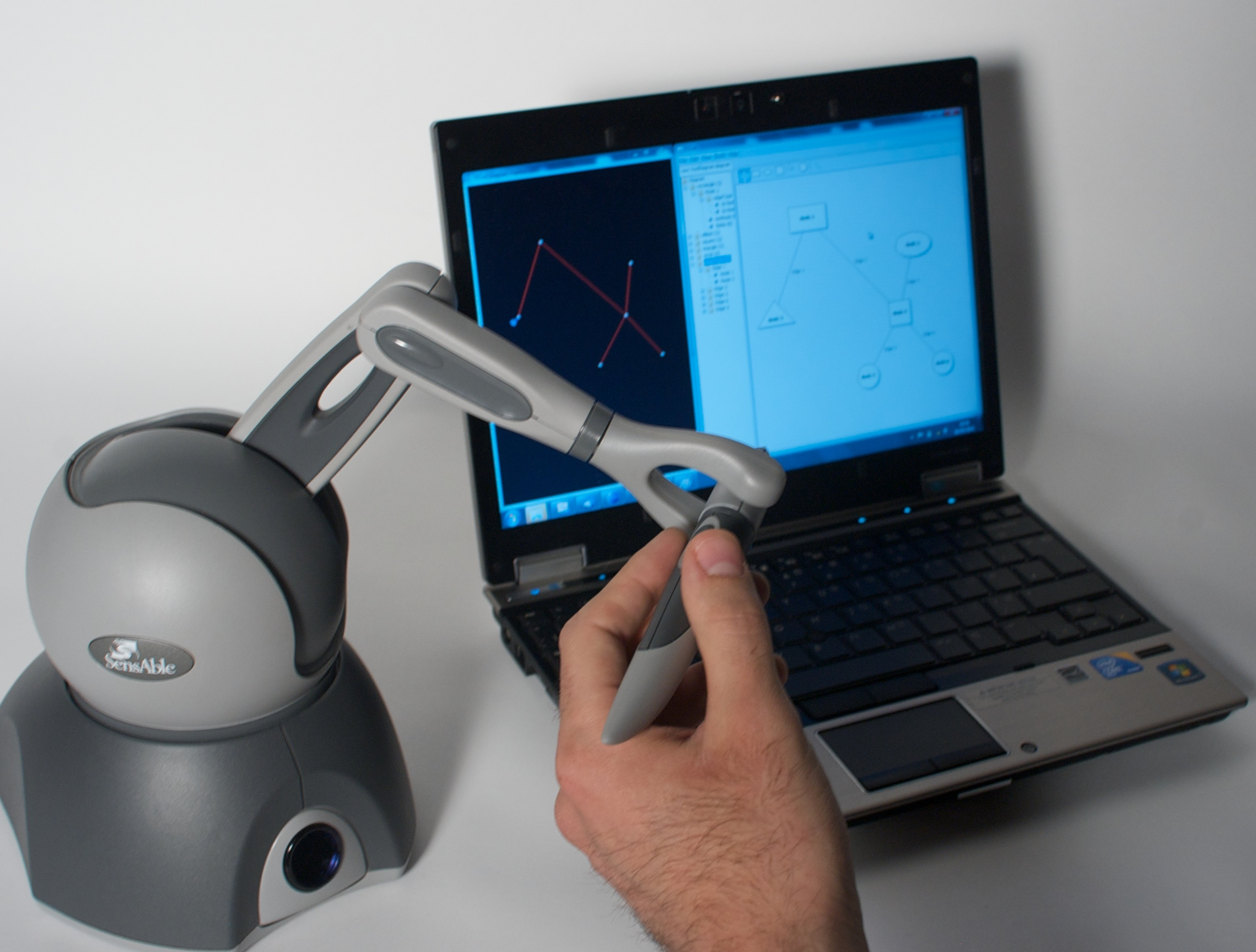

For the touch part, we use a PHANTOM Omni haptic device: a robotic arm attached to a stylus that can be programmed to simulate the feel of 3D shapes, textures and forces. It can simulate rich physical properties such as mass, along with most physical sensations that can be represented mathematically. In the diagram editor, nodes have a magnetic effect: if you move the stylus close to one, the stylus gets pulled towards it. You can grab a node and move it to another location, and when you do, a spring-like effect is applied to simulate dragging. If you let it go, the node springs back to its original location. Sound and touch are also integrated to reinforce each other. For instance, as you drag a node virtually in space, you hear a chain-like earcon (like dragging a metal ball chained to a prisoner?!). When you drop it in a new location, you hear the sound of a dart hitting a dart board.
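The magnetic and spring-like effects can be thought of as simple force calculations. The sketch below is an illustration only and does not use the haptic device's real programming interface: the function names, the constant-strength pull, the capture radius and the linear (Hooke's law) spring are all assumptions chosen to keep the example short.

```python
import math


def magnetic_force(stylus, node, radius, strength):
    """Pull the stylus towards a node once it is within `radius` of it.

    Assumption: the pull has constant magnitude `strength` and points
    straight at the node; outside the radius there is no force.
    """
    distance = math.dist(stylus, node)
    if distance == 0 or distance > radius:
        return (0.0, 0.0, 0.0)
    return tuple(strength * (n - s) / distance for s, n in zip(stylus, node))


def spring_force(stylus, origin, stiffness):
    """Spring-like resistance felt while dragging a node away from `origin`.

    Assumption: a linear spring, so the force grows with the distance
    dragged and always points back towards the node's original location.
    """
    return tuple(stiffness * (o - s) for s, o in zip(stylus, origin))


# Stylus one unit to the right of a node: it is pulled left, towards the node.
pull = magnetic_force((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), radius=2.0, strength=0.5)

# Dragging a node two units up from its origin: the spring pulls back down.
drag = spring_force((0.0, 2.0, 0.0), (0.0, 0.0, 0.0), stiffness=0.25)
```

A haptic device would evaluate forces like these many times a second and push them back through the stylus, which is what makes the node feel magnetic or springy.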

Evaluations in the wild

Another aspect of our work is to apply it in the wild. That is, rather than building and testing systems in the lab, which is the traditional way of conducting experimental studies, we deploy them in real world scenarios, with real users doing real tasks, and observe the impact of our technology on how users conduct their work. For example, we deployed the tool in a school for blind and partially sighted children and observed how teachers and students used it during class sessions to explain concepts using diagrams or to complete group exercises involving the design and editing of diagrams. In another setting, we deployed the tool in a local government office where a blind manager used it with their assistant to update a chart of their organization’s structure (see video 2 below).

This novel approach to evaluating interactive systems in the wild has allowed us to not only gather feedback about how to improve the system we designed directly from its users, but also to understand the nature of cross-modal collaboration in real world scenarios. We were able to identify a number of issues related to the impact of cross-modal technology on collaborative work such as how it changes the dynamics of a classroom with children with mixed physical abilities.

Having tried out the editor in a variety of schools and work environments where visually impaired and sighted people use diagrams as part of their everyday activities, we are happy to see it work well. The tool is open source and free to download, so why not try it yourself? You might see diagrams in a whole new light.

Resources

- The Collaborative Cross-modal Interface project website

- Collaborating Through Sounds, PhD thesis, 2010

- Metatla, O., Bryan-Kinns, N., Stockman, T., & Martin, F. Supporting Cross-Modal Collaboration in the Workplace. Proceedings of the BCS HCI 2012 People & Computers XXVI, Birmingham, UK

Videos

Video 1: An overview of the cross-modal collaborative diagram editor and its interactive features

Video 2: Example videos from deployment of the cross-modal tool in real workplace scenarios.

A version of this article was originally published in the CS4FN magazine.
